Weekly Roundup: Data Annotators, PRC's AI Strategy, and OMB's New Rules

A short reading list of in-depth analyses of some of the biggest stories of 2024

It’s been a relatively slow news cycle for AI regulation this week, though hardly a quiet couple of months. Here are some comprehensive recaps and in-depth analyses of recent headlines:

From The Economist on trust-busting lawsuits:

Trustbusters have big tech in their sights. On March 25th the European Commission opened a probe into Apple, Alphabet (Google’s parent company) and Meta (which is Facebook’s). Regulators in Brussels think the measures which the American technology behemoths have put in place to comply with the Digital Markets Act, a sweeping new law meant to ensure fair competition in the EU’s tech industry, are not up to scratch.

Days earlier America’s Department of Justice, along with attorneys-general from 16 states, sued Apple in what could be the most ambitious case brought against American tech since the DoJ battled Microsoft a quarter-century ago. It alleges that the iPhone-maker uses a monopoly position in smartphones to “thwart” innovation, “throttle” competitors and discourage users from buying rival devices. Apple denies wrongdoing.

The Wall Street Journal on AI companies’ legal liability:

“If in the coming years we wind up using AI the way most commentators expect, by leaning on it to outsource a lot of our content and judgment calls, I don’t think companies will be able to escape some form of liability,” says Jane Bambauer, a law professor at the University of Florida who has written about these issues.

The implications of this are momentous. Every company that uses generative AI could be responsible under laws that govern liability for harmful speech, and laws governing liability for defective products—since today’s AIs are both creators of speech and products. Some legal experts say this may create a flood of lawsuits for companies of all sizes.

Lessons from GDPR for AI Policymaking

This paper, written in part by my former professor, Josephine Wolff, “aims to address two research questions around AI policy: (1) How are LLMs like ChatGPT shifting the policy discussions around AI regulations? (2) What lessons can regulators learn from the EU’s General Data Protection Regulation (GDPR) and other data protection policymaking efforts that can be applied to AI policymaking?”

Part of its argument is “that the extent to which the proposed AI Act relies on self-regulation and the technical complexity of enforcement are likely to pose significant challenges to enforcement based on the implementation of the most technologically and self-regulation-focused elements of GDPR.”

Two Substacks on China’s AI Strategy

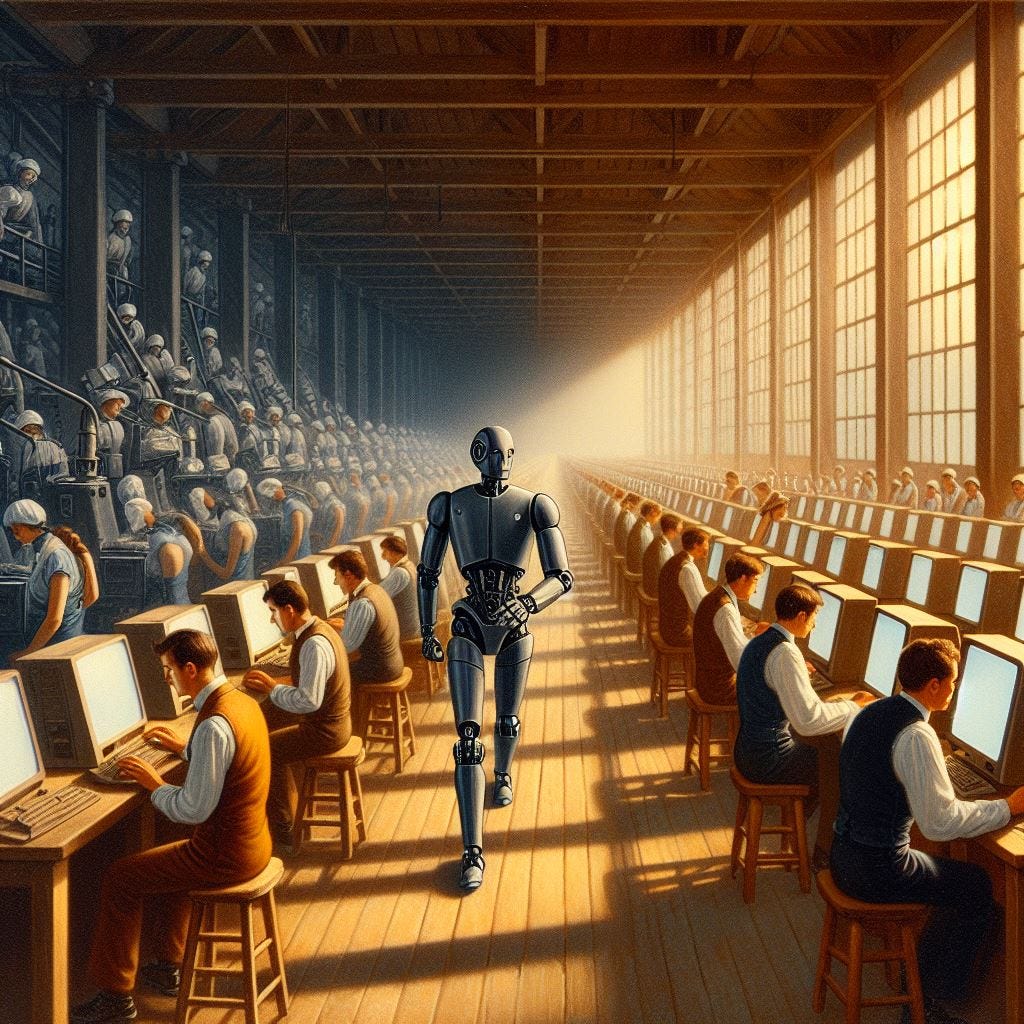

Let us not forget the humble data annotator

Artificial Intelligence increasingly automates and optimizes tasks, yet it is built on the back of meticulous, morose, and mind-numbing labor. Every link in this well-sourced story is worth a follow-on click. It provides damning evidence of AI’s extractive relationship with other countries—a fact even more reprehensible since similar jobs were known to take a huge psychological toll on American workers.

Companies with billions in the bank, like OpenAI, have relied on annotators in third-world countries paid only a few dollars per hour. Some of these annotators are exposed to highly disturbing content, like graphic imagery, yet aren’t given time off (as they’re usually contractors) or access to mental health resources.

An excellent piece in NY Mag peels back the curtains on Scale AI in particular, which recruits annotators in places as far-flung as Nairobi, Kenya. Some of the tasks on Scale AI take labelers multiple eight-hour workdays, with no breaks, and pay as little as $10. And these workers are beholden to the whims of the platform. Annotators sometimes go long stretches without receiving work, or they’re unceremoniously booted off Scale AI, as happened to contractors in Thailand, Vietnam, Poland and Pakistan recently.

Some annotation and labeling platforms claim to provide “fair-trade” work. In fact, they’ve made it a central part of their branding. But as MIT Tech Review’s Kate Kaye notes, there are no regulations, only weak industry standards for what ethical labeling work means, and companies’ own definitions vary widely.

Although the news cycle may have been slow, the federal government has not been idle.

The White House’s Office of Management and Budget, which oversees federal agency performance and administers the federal budget, released new rules on how government agencies can use AI.

The policy includes a raft of requirements for systems that impact people’s safety or rights, such as AI systems that affect functioning of electrical grids, decisions about asylum status or government benefits.

Government agencies using those systems — other than intelligence agencies and AI in national security systems — will have to test the AI for performance in the real-world context where it’s fielded, assemble an AI impact assessment for systems and more by Dec. 1, or stop using the systems in question.

Agencies are also required to test systems affecting people’s rights for disparate impacts across demographic groups and notify individuals when AI meaningfully influences the outcome of negative decisions about them, like the denial of government benefits.

The OMB released a “Request for Information: Responsible Procurement of Artificial Intelligence in Government.” Comments are due April 29.

The National Institute of Standards and Technology’s new U.S. AI Safety Institute will undoubtedly support the OMB's rules. The institute’s new director described the organization’s pillars as “testing and evaluation; safety and security protocols; and developing guidance focused on AI-generated content.”

The U.S. Department of Commerce is investigating the security risks of importing vehicles from adversarial nations. Newer cars are connected to the internet and often transmit large amounts of data without the user’s knowledge. The investigation comes on the heels of competition concerns surrounding China’s cheap EVs, as well as genuine privacy concerns already playing out in U.S. courts.

In other emerging technology regulations, “The U.S. Cybersecurity and Infrastructure Security Agency on Wednesday published long-awaited draft rules on how critical-infrastructure companies must report cyberattacks to the government.”

Under the rules, companies that own and operate critical infrastructure would need to report significant cyberattacks within 72 hours and report ransom payments within 24 hours.

Companies have opposed reporting requirements, saying that assessing an attack early on is difficult. They also worry disclosing too many specifics may aid attackers by revealing details of incident-response processes and cyber defenses.

Businesses also point out they are subject to more than three-dozen reporting requirements across various federal agencies, plus state data-breach laws.

The rules apply to any company owning or operating systems the U.S. government classifies as critical infrastructure, such as healthcare, energy, manufacturing and financial services [and]...whose systems may be vital to a particular sector, such as service providers.

State governments have been active as well. Idaho, Indiana, New Mexico, Wisconsin, and Utah all passed laws this year requiring disclosure of AI use in political campaigns. Utah’s law “[r]equires the media to be embedded with tamper-evident digital content provenance that discloses the author, creator, and any other entities that subsequently altered the media and disclosure of the use of AI to create or edit the media.”

Embedding disclosures or “watermarking” is not a foolproof solution for identifying generative AI, although it is being widely adopted; the EU’s AI Act requires watermarking as well. “Watermarks for AI-generated text are easy to remove and can be stolen and copied, rendering them useless, researchers have found. They say these kinds of attacks discredit watermarks and can fool people into trusting text they shouldn’t.”

In other news

The White House placed additional export controls on more types of AI chips bound for China.

A chatbot powered by Microsoft and trained to answer legal questions for small businesses in New York City is producing grossly incorrect advice. New York also announced another AI initiative, this one meant to detect weapons on subways. The company providing the technology was sued for misleading shareholders the day after Mayor Adams announced the initiative.

Instagram will modify its recommendation process for political posts. IG defines “political” as “potentially related to things like laws, elections, or social topics.” While this may seem like a step in the right direction, it doesn’t fundamentally change the content moderation or recommendation process; it only makes the “shadow banning” policy official.