Weekly Roundup: A Rough Holiday Season for IP

IP lawsuits and NIST get the New Year off to a running start. Plus, resources for emerging technology research.

In the final week of 2023 and the first week of 2024, battles over AI and IP led the way.

The New York Times sued OpenAI, saying that the AI giant “[seeks] to free-ride on the Times’s massive investment in its journalism by using it to build substitutive products without permission or payment.” The “unlawful use” of the paper’s “copyrighted news articles, in-depth investigations, opinion pieces, reviews, how-to guides, and more” to create artificial intelligence products “threatens The Times’s ability to provide that service.”

An analysis of the SAG-AFTRA strike-ending contract concludes that the protections the union won may not be very solid, given studios’ history of using computer technology in film production for things like supplementing extras (think LOTR battle scenes) and their vast troves of acting data (every movie they own the copyright for). It is not inconceivable that “synthetic actors” will take more Hollywood jobs in 2026, when the union’s contract expires, than they did this time around.

Although the cases are not strictly AI regulation, Big Tech, the largest investor in AI, faces a multitude of antitrust lawsuits that could curtail both its capital and its market share.

Google faces two lawsuits: one claiming its “search engine is an unlawful monopoly” and another that “claims it is an illegal monopolist in the market for brokering ads on the internet.”

Meta, meanwhile, is accused of having “unlawfully sought to suppress competition by buying up potential rivals such as the messaging platform WhatsApp and image-sharing app Instagram.”

The FTC and state-backed lawsuit against Amazon “[alleges] Amazon unfairly promotes its own platform and services at the expense of third-party sellers who rely on the company’s e-commerce marketplace for distribution.”

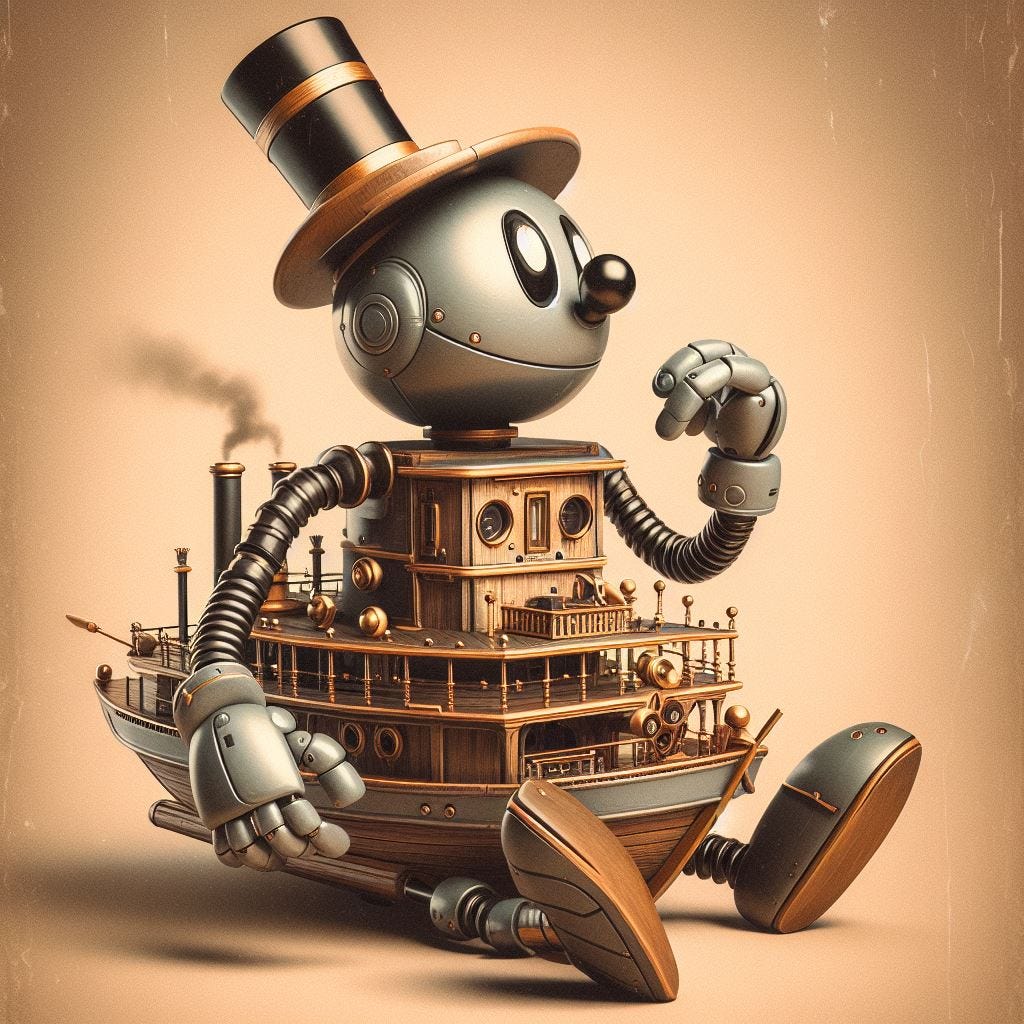

Rated Disney for adults

Steamboat Willie entered the public domain early this year, prompting some pretty disturbing AI-generated fan fiction:

Within days, an explosion of homebrewed Steamboat Willie art hit the internet, including a horror movie trailer, a meme coin—and, of course, a glut of AI-generated Willies. Some are G-rated. Others, like “Creamboat Willie,” are decidedly not. (Willie doing drugs is another popular theme.)

Disney, a large corporation with strong branding tied to old IP, is in a somewhat unusual situation. Yet the potential damage AI-generated images can do to its brand, and how quickly, might worry the studio. Even for brands with current copyrights, the ease and speed with which deepfakes can be disseminated mean the damage can be done long before a cease-and-desist order is issued. All of this, however, assumes consumers will believe and then react to offensive brand-based deepfakes.

Not all deepfakes are so grotesque. Last Christmas, Santa made it out OK, using advanced AI systems to cover more ground for a categorically more digital clientele.

In other news

Rite Aid was banned from using facial recognition technology for five years after its program misidentified tens of thousands of customers as “persons of interest.”

Robotaxis had a tough year. A new report surfaced that Austin, TX, asked Cruise to ground its taxi service during Halloween out of fear for children trick-or-treating. In China, autonomous taxis are so unprofitable that companies are seeking out other revenue streams to stay afloat.

In the Federal Government

NIST has put out a call for information on the following three areas. Responses are due by February 2, 2024.

Developing Guidelines, Standards, and Best Practices for AI Safety and Security

Reducing the Risk of Synthetic Content

Advance Responsible Global Technical Standards for AI Development

NIST’s National Artificial Intelligence Advisory Committee Law Enforcement Subcommittee will hold an open in-person and virtual conference on January 19, 2024, from 1 to 2:30 p.m. EST. The meeting will report the working group’s findings, identify actionable recommendations, and discuss updates on goals and deliverables.

The FTC will host a virtual summit on January 25, 2024, focusing on ways to protect consumers and competition.

The FTC also “began accepting submissions for its Voice Cloning Challenge, which is aimed at promoting the development of ideas to protect consumers from the misuse of artificial intelligence-enabled voice cloning for fraud and other harms.”

In case you missed it

Two essays from prominent voices in AI regulation appeared in the New York Times last week. The first, written by Congressman Ro Khanna, invokes the Davos conferences and globalization as a parallel example to caution his party against underestimating AI’s potential to cause significant job losses.

The second, written by Joy Buolamwini and Barry Friedman, laments the lack of federal oversight over AI in policing and offers alternatives.

Lastly, some valuable resources for emerging technology research:

The Center for Security and Emerging Technology consolidated all of its research into one guide, with something for everyone in every sector.

The Emerging Technology Observatory, an organization that creates high-quality data tools, has interactive tools, maps, and resources that track emerging technology trends in different countries, open-source software reviewers, a Chinese-language research depository, supply-chain analysis, and more.