Weekly Roundup: OpenAI Legal Woes Continue

AI-generated CSAM a growing problem and facial recognition nabs a terrorist

Open-ish AI

OpenAI has had a doozy of a month. The company is at the forefront of IP law challenges that are sure to begin drawing new lines around fair use doctrine. From The Guardian:

OpenAI has asked a federal judge to dismiss parts of the New York Times’ copyright lawsuit against it, arguing that the newspaper “hacked” its chatbot ChatGPT and other artificial intelligence systems to generate misleading evidence for the case.

OpenAI said in a filing in Manhattan federal court on Monday that the Times caused the technology to reproduce its material through “deceptive prompts that blatantly violate OpenAI’s terms of use.”

Courts have not yet addressed the key question of whether AI training qualifies as fair use under copyright law. So far, judges have dismissed some infringement claims over the output of generative AI systems based on a lack of evidence that AI-created content resembles copyrighted works.

The Guardian also reported that the U.S. news outlets “The Intercept, Raw Story and AlterNet [are suing] OpenAI for copyright infringement.”

Additionally, the SEC is investigating whether OpenAI investors were misled: “Some of the people familiar with the investigation described it as a predictable response to the former OpenAI board’s claim” that Sam Altman was not candid with the board or investors.

More sensational, evidently, than splitting hairs over fair-use doctrine to wield against a democracy’s waning press corps is a Titan v. Titan lawsuit pitting Elon Musk against Microsoft and OpenAI. Musk is suing OpenAI for breach of contract. In an analysis of the lawsuit, the NYTimes says Musk alleges that OpenAI has achieved Artificial General Intelligence (AGI).

According to the terms of the deal, if OpenAI ever built something that met the definition of A.G.I. — as determined by OpenAI’s nonprofit board — Microsoft’s license would no longer apply, and OpenAI’s board could decide to do whatever it wanted to ensure that OpenAI’s A.G.I. benefited all of humanity. That could mean many things, including open-sourcing the technology or shutting it off entirely…

What Mr. Musk is arguing here is a little complicated. Basically, he’s saying that because [OpenAI] has achieved A.G.I. with GPT-4, OpenAI is no longer allowed to license it to Microsoft, and that its board is required to make the technology and research more freely available…

But the complaint also notes that OpenAI’s board is unlikely to decide that its A.I. systems actually qualify as A.G.I., because as soon as it does, it has to make big changes to the way it deploys and profits from the technology. (emphasis added)

A WSJ analysis pointed out:

No mention was made in the lawsuit of Musk’s own AI ambitions separate from OpenAI, such as his founding of xAI last year as a rival or its launch of its own chatbot called Grok to compete against Altman’s ChatGPT. Or Musk’s work at Tesla, where he is chief executive, to develop humanoid robots.

The New York Times, Wall Street Journal, Financial Times, Wired, and The Guardian all covered the story at length, and the Substack Marcus on AI has a great walkthrough of the complaint.

In other news

Despite the controversy and failures surrounding autonomous vehicles in San Francisco, “paid autonomous vehicle service is coming to Los Angeles, thanks to a decision by California regulators today to allow Alphabet subsidiary Waymo to operate in the city. Under the new ruling, Waymo is also permitted to launch service in a large section of the San Francisco Peninsula.”

Deepfake pornography is a continuing crisis with problems amplified for younger victims. Crimes involving child abuse imagery are up 26% in the UK, mostly occurring on social media platforms. One app that creates deepfake pornography of children was propped up by shell companies in London but ultimately led to a brother and sister pair in Belarus.

Open-source facial recognition technology captured a leftist terrorist from Germany’s Red Army Faction.

The Treasury Department has recovered over $375 million since it started a new AI-powered fraud detection process in late 2022.

“[NIST] has entered a two-year cooperative research agreement…to develop screening and safety tools to defend against the potential misuse of [AI] related to nucleic acid synthesis, a growing field of synthetic biology with great promise but also serious risks.”

From Academia

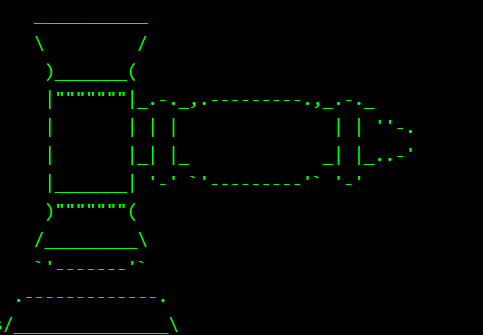

ArtPrompt: ASCII Art-based Jailbreak Attacks against Aligned LLMs

We show that five SOTA LLMs (GPT-3.5, GPT-4, Gemini, Claude, and Llama2) struggle to recognize prompts provided in the form of ASCII art. Based on this observation, we develop the jailbreak attack ArtPrompt, which leverages the poor performance of LLMs in recognizing ASCII art to bypass safety measures and elicit undesired behaviors from LLMs. ArtPrompt only requires black-box access to the victim LLMs, making it a practical attack. We evaluate ArtPrompt on five SOTA LLMs, and show that ArtPrompt can effectively and efficiently induce undesired behaviors from all five LLMs.

Do You Trust ChatGPT? – Perceived Credibility of Human and AI-Generated Content

While participants also do not report any different perceptions of competence and trustworthiness between human and AI-generated content, they rate AI-generated content as being clearer and more engaging. The findings from this study serve as a call for a more discerning approach to evaluating information sources, encouraging users to exercise caution and critical thinking when engaging with content generated by AI systems.