Weekly Roundup: Music and the Robo-broker

Parts of the music industry embrace AI, highlights from this week's AI regulation news, and an in-depth look at the SEC rule regulating AI in the financial sector.

A playlist for singularity

Parts of the music industry are embracing AI. YouTube partnered with Universal Music Group to explore the future of music in the context of generative AI.

“Universal and YouTube plan to collaborate on product development, principles guiding the use of music in AI programs and new ways to pay artists whose work is used in AI-generated content.”

This partnership may help YouTube head off future IP lawsuits, one of the benefits AI companies gain from some of their data partnerships.

But what benefits large companies doesn’t always translate into benefits for the user. The Verge’s article on the same story raises several points about the imbalance of bargaining power between users and large AI companies and about complex, messy IP laws, and it criticizes the fact that AI companies scrape lots of other data for free anyway.

Data scraping has long been a point of contention between “owners” of data sources and those looking to access them. Scraping survived attempts to have it declared illegal in the United States, but it took a hit under the GDPR. The Markup published a user-friendly guide this week on how to navigate scraping under the GDPR.

Proactive governance

Hardly inert. Congress will return next month with a lot of AI policy on its plate. The judicial branch is just as busy, with many ongoing liability, privacy, and IP cases.

Schools begin to reverse generative AI bans. School districts, including Los Angeles and New York City, are beginning to remove generative AI bans and work toward policies incorporating AI technology into education.

Regulation isn’t always about prohibition. A California law passed last year allows public transit vehicles to mount cameras to enforce bus lane parking violations. Oakland will use AI to identify cars parked illegally in bus lanes and send an “evidence package” to police for ticketing.

Reactive market

Low-speed Nvidia chips are better for China than alternatives. While Nvidia has lowered processing speeds for chips it sells in China to comply with U.S. sanctions, the slower chips still outperform the best chips on the Chinese market.

Not quite open source. Meta bills Llama 2 as open source, but it doesn’t fit the definition of “open source” most people have in mind when discussing software.

Breaking into the chip market is the goal of one startup in New Jersey. Its strategy is to “offer customized systems and a wider range of chips” for a “broad spectrum of AI applications.”

Using a light-based system, MIT researchers demonstrated “greater than 100-fold improvement in energy efficiency and a 25-fold improvement in compute density.” If this breakthrough is viable for large AI companies, it could allow more conventional chips to stay on the market and, therefore, remain affordable and accessible to smaller companies.

Any sector requiring small onboard AI systems (like drones or AVs) will want to read the primer on technological constraints for onboard AI published by the Center for Security and Emerging Technology.

Automate advice, automate risk

Even though companies talk about using AI, not all of them do. According to the Financial Times:

Almost 40% of companies in the S&P 500 mentioned AI or related terms in earnings calls last quarter.

Yet, only 16% mentioned it in their corresponding regulatory filings.

The finance sector, however, is leveraging AI, but it faces a consumer trust problem. Only 31% of consumers would trust advice from an AI tool without verification. That number jumps to 51% if the advice is verified by a financial planner, indicating the immediate future for financial sector AI may be internal use.

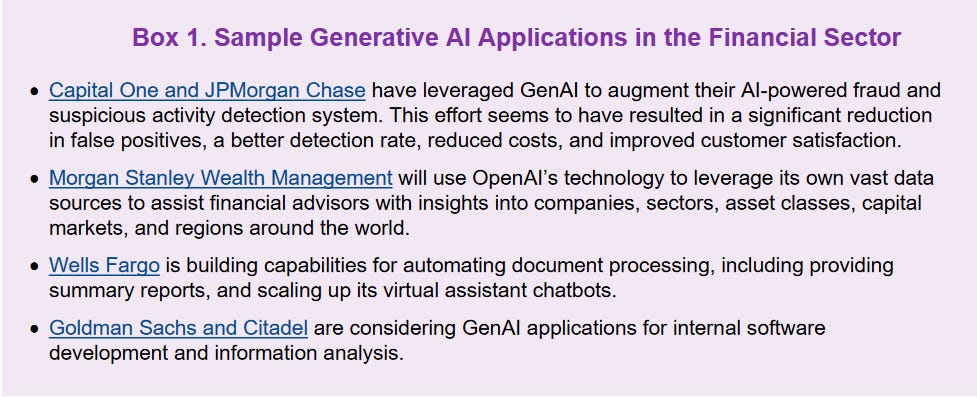

The International Monetary Fund (IMF) published a report Tuesday detailing use cases in the financial sector.

The report also details the risks of using AI in the financial sector:

Bias: AI has a track record of mimicking and exacerbating existing bias.

Compliance: How can a company comply with disclosure laws if it doesn’t understand the AI?

Stability: A mistake by AI in the financial sector could have global market implications.

Cybersecurity: Prompt injection and data poisoning pose unique risks that are still unsolved.

Unautomated compliance

The Securities and Exchange Commission (SEC) shares some of the same concerns as the IMF. In particular, the SEC is worried that current regulations requiring companies to disclose conflicts of interest and take steps to mitigate those conflicts will be rendered ineffective by AI.

The SEC proposed a rule on August 8 to update an existing regulatory framework so that it accounts for emerging technology.

Financial firms are already required to disclose conflicts of interest, exercise reasonable diligence in making recommendations to clients, and keep records. The new rule makes firms responsible for their AI in the same way.

The rule uses the term Predictive Data Analytics (PDA) technology so that it stays flexible enough to cover future emerging technologies. In short, AI falls under PDA.

The SEC is attempting to avoid problems that will inevitably arise due to AI’s inherent weaknesses, like model drift, poor heuristics, poor training data, and opaque learning processes. These intrinsic weaknesses may cause PDA technologies to promote the firm's profits over its customers' interests.

Compounding these problems is speed. With many companies using chatbots and mobile apps, advice containing conflicts of interest could be transmitted quickly and irretrievably.

Like the original rule, the updated rule focuses primarily on disclosures and audits. It puts the onus on users of PDA to:

Evaluate any use or reasonably foreseeable potential use by the firm or its associated person of a covered technology in any investor interaction.

Identify any conflict of interest associated with that use or potential use.

Determine whether any such conflict of interest places or results in placing the firm's or its associated person's interest ahead of the interest of investors.

Eliminate or neutralize the effect of those conflicts of interest that place the firm's or its associated person's interest ahead of the interest of investors.

Although the onus is placed on the firm, the rule gives plenty of latitude in how the firm can audit AI. For example, the “evaluate and identify” portion of the rule does not mandate a specific type of evaluation process. It encourages firms to consider the circumstances in which the technology is used and the type of technology.

So long as the firm has taken steps that are sufficient under the circumstances to evaluate its use or reasonably foreseeable potential use of the covered technology in investor interactions and identify any conflicts of interest associated with that use or potential use, this aspect of the proposed conflicts rules would be satisfied.

For firms that use third-party software, reviewing the third party’s documentation is sufficient “provided that the other documentation regarding how the technology functions is sufficiently detailed as to how the technology works.” Again, the onus is on the firms to at least understand the technology well enough to know how thorough the third-party documentation is.

For complex machine learning systems with large data sets and “black box” deep learning, the rule is clear: “A firm's lack of visibility [into the technological function] would not absolve it of the responsibility to use a covered technology in investor interactions in compliance with the proposed conflicts rules.”

An additional requirement is initial and recurring testing of the implemented technology. As with the “evaluate and identify” portion of the rule, companies have no rigid formula to follow.
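Purely as an illustration of what recurring testing might look like, a firm could log the recommendations a covered technology makes and periodically check whether the tool steers investors toward products that earn the firm more. The sketch below is hypothetical and is not something the proposed rule prescribes: the `Recommendation` record, the `firm_revenue_bps` field, and the 10-basis-point tolerance are all assumptions made for this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Recommendation:
    """One logged recommendation (hypothetical schema, for illustration only)."""
    product: str
    firm_revenue_bps: float  # assumed field: what the firm earns on the product, in basis points
    was_recommended: bool    # whether the tool surfaced the product to the investor


def revenue_skew(log: list[Recommendation]) -> float:
    """Mean firm revenue of recommended products minus that of products passed over."""
    recommended = [r.firm_revenue_bps for r in log if r.was_recommended]
    passed_over = [r.firm_revenue_bps for r in log if not r.was_recommended]
    if not recommended or not passed_over:
        return 0.0  # nothing to compare against
    return mean(recommended) - mean(passed_over)


def flag_for_review(log: list[Recommendation], tolerance_bps: float = 10.0) -> bool:
    """Flag the tool for human review when the skew exceeds an assumed tolerance."""
    return revenue_skew(log) > tolerance_bps


# Example run on a small, made-up log.
sample_log = [
    Recommendation("Fund A", 50.0, True),
    Recommendation("Fund B", 70.0, True),
    Recommendation("Fund C", 20.0, False),
    Recommendation("Fund D", 30.0, False),
]
print(revenue_skew(sample_log), flag_for_review(sample_log))
```

A positive skew would not by itself prove a conflict of interest, since higher-revenue products may also be the better fit for some investors. A check like this only signals when a human should take a closer look, and a firm would choose its own metric and threshold based on how the technology is used.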

Regulating high-risk sectors

Regulating high-risk sectors is a difficult task. Many of the items the SEC proposes are similar to those put forward by people working on regulating autonomous weapons. The rules may be burdensome and may make automating some uses of AI infeasible, but the risk associated with failure is just as heavy.

One of the significant challenges in implementing this rule will be measuring the effectiveness of an audit. Even the best-intentioned, technically savvy auditor may have next to no industry standards or best practices to look to when creating and evaluating these audits. The same problem exists for the regulator, just from the other side of the table.

The National Institute of Standards and Technology (NIST) is on the right track with its AI research, but the sooner it can release a portfolio of measurements, the easier complying with this rule will be.

The proposed rule is open for comment until October 10. Here is how to write a public comment.

Lastly, there is a new Calendar page for upcoming AI events. If you have something you would like added, please email me!